基于DeepSeek的智能新闻聚合系统开发手记

2025年2月10日

编程

# 项目目的

本系统旨在实现以下核心功能:

1. **自动化新闻采集**:通过RSS订阅源定时抓取最新资讯

2. **智能摘要生成**:利用DeepSeek大模型进行内容提炼

3. **多平台推送**:支持邮件等多种信息投送方式

4. **灵活部署**:提供GitHub Actions/Docker双部署方案

# 开发思路

1. 使用配置文件配置RSS订阅源。

2. 定时(每10分钟)手机订阅源更新情况,去重后保存在文件中。

3. 每天(固定时刻)使用DeepSeek总结新闻内容,按照**关键新闻对象**进行分类。

4. 过期新闻文件自动删除。

> **注意**

>

> 原本计划使用Outlook邮箱发送,但认证方式过于复杂,改用了自建邮箱认证,因此只能发送到自建邮箱中。

# 完整代码

## Python 脚本

```python

import os

import tomli

import json

import feedparser

import requests

import smtplib

import markdown

from email.header import Header

from email.mime.text import MIMEText

from email.mime.multipart import MIMEMultipart

from datetime import datetime

from pathlib import Path

# 新增数据存储路径

DATA_DIR = Path(__file__).parent / "data/daily_articles"

DATA_DIR.mkdir(parents=True, exist_ok=True)

def load_config():

with open('config.toml', 'rb') as f:

config = tomli.load(f)

# 环境变量替换

config['deepseek_api']['api_key'] = os.getenv('DEEPSEEK_API_KEY', config['deepseek_api']['api_key'])

config['email']['sender'] = os.getenv('EMAIL_SENDER', config['email']['sender'])

config['email']['username'] = os.getenv('EMAIL_USERNAME', config['email']['password'])

config['email']['password'] = os.getenv('EMAIL_PASSWORD', config['email']['password'])

config['email']['receiver'] = os.getenv('EMAIL_RECEIVER', config['email']['receiver'])

return config

def fetch_rss_feeds(feeds):

articles = []

for feed in feeds:

d = feedparser.parse(feed['url'])

for entry in d.entries[:10]: # 取最新10条

articles.append({

'title': entry.title,

'summary': entry.get('summary', ''),

'link': entry.link

})

return articles

def summarize_with_deepseek(articles, config):

content = "\n\n".join([f"标题:{a['title']}\n摘要:{a['summary']}" for a in articles])

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {config['api_key']}"

}

payload = {

"model": config['model'],

"messages": [

{

"role": "user",

"content": f"请用中文总结以下新闻内容,要求:\n"

f"1. 按照关键新闻对象进行分类\n"

f"2. 总结关于关键新闻对象所有新闻内容中的关键信息\n"

f"3. 使用简洁的书面语言\n"

f"内容:\n{content[:15000]}" # 限制内容长度

}

],

"temperature": config['temperature'],

"max_tokens": config['max_tokens']

}

response = requests.post("https://api.deepseek.com/chat/completions",

json=payload,

headers=headers).json()

return response['choices'][0]['message']['content']

def send_email(content, config):

msg = MIMEMultipart('alternative')

msg['Subject'] = Header('每日新闻摘要', 'utf-8')

msg['From'] = config['sender']

msg['To'] = config['receiver']

html_content = markdown.markdown(content)

msg.attach(MIMEText(html_content, 'html', 'utf-8'))

with smtplib.SMTP(config['smtp_server'], config['smtp_port']) as server:

server.starttls()

server.login(config['username'], config['password'])

server.sendmail(config['sender'], [config['receiver']], msg.as_string())

def fetch_and_save():

"""每10分钟执行的数据抓取任务"""

config = load_config()

articles = fetch_rss_feeds(config['rss_feeds']['entries'])

# 按日期存储

today = datetime.now().strftime("%Y-%m-%d")

output_file = DATA_DIR / f"{today}.json"

# 追加模式写入

with open(output_file, "a") as f:

for article in articles:

f.write(json.dumps(article, ensure_ascii=False) + "\n")

def daily_summary():

"""每日汇总任务"""

config = load_config()

today = datetime.now().strftime("%Y-%m-%d")

input_file = DATA_DIR / f"{today}.json"

# 读取当日所有文章

articles = []

with open(input_file) as f:

for line in f:

articles.append(json.loads(line))

# 去重处理

seen = set()

unique_articles = []

for a in articles:

ident = a['link']

if ident not in seen:

seen.add(ident)

unique_articles.append(a)

# 生成摘要并发送

summary = summarize_with_deepseek(unique_articles, config['deepseek_api'])

send_email(summary, config['email'])

# (可选) 清理历史数据

keep_days = 7 # 保留最近7天数据

for f in DATA_DIR.glob("*.json"):

if (datetime.now() - datetime.fromtimestamp(f.stat().st_mtime)).days > keep_days:

f.unlink()

if __name__ == "__main__":

import sys

if sys.argv[1] == "crawl":

fetch_and_save()

elif sys.argv[1] == "summary":

daily_summary()

else:

print("Usage: python news_bot.py [crawl|summary]")

```

## 配置文件

```toml

[rss_feeds]

entries = [

{ name = "环球网 - 国际", url = "https://rss.huyg.site:8443/huanqiu/news/world" },

{ name = "观察者网", url = "https://rss.huyg.site:8443/guancha/headline" },

{ name = "参考消息 - 国际", url = "https://rss.huyg.site:8443/cankaoxiaoxi/column/gj" },

]

keep_days = 7

[deepseek_api]

api_key = "${DEEPSEEK_API_KEY}"

model = "deepseek-chat"

max_tokens = 2000

temperature = 0.7

[email]

sender = "${EMAIL_SENDER}"

username = "${EMAIL_USERNAME}"

password = "${EMAIL_PASSWORD}"

receiver = "${EMAIL_RECEIVER}"

smtp_server = "huyg.site"

smtp_port = 587

```

## Dockerfile

```dockerfile

FROM python:3.10-slim

ENV TZ=Asia/Shanghai

RUN ln -snf /usr/share/zoneinfo/$TZ /etc/localtime && echo $TZ > /etc/timezone

# 安装cron

RUN apt-get update && apt-get install -y cron && rm -rf /var/lib/apt/lists/*

WORKDIR /app

COPY . .

# 安装依赖

RUN pip install feedparser tomli requests markdown

# 配置cron

COPY cronjobs /etc/cron.d/

RUN chmod 0644 /etc/cron.d/* && \

crontab /etc/cron.d/crawl.cron && \

crontab /etc/cron.d/daily_summary.cron

# 数据卷

VOLUME /app/data

# 启动cron并保持容器运行

CMD ["sh", "-c", "cron && tail -f /var/log/cron.log"]

```

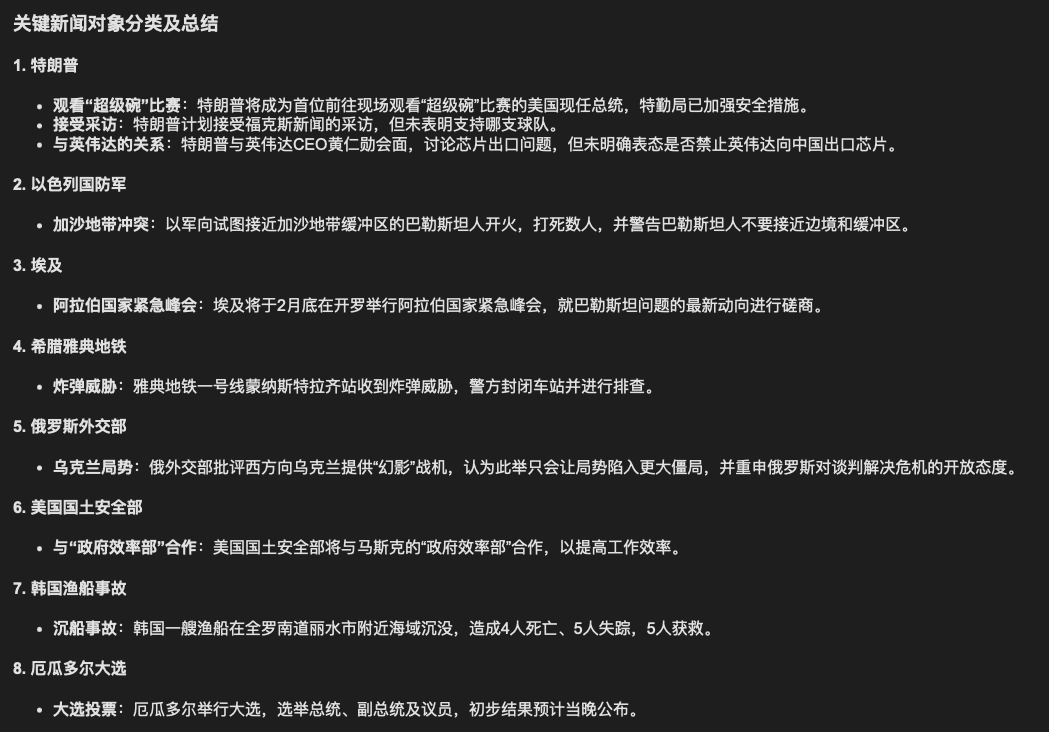

# 运行效果

感谢您的阅读。本网站 MyZone 对本文保留所有权利。